Hey everyone! Ever feel like you're swimming in a sea of tech jargon? AI this, AI that... it can be overwhelming! Today, we're going to tackle one of those buzzwords that gets thrown around a lot: "intelligent agent in AI". Sounds fancy, right? But trust me, it's not as complicated as it seems. By the end of this post, you'll not only understand what it is, but you'll also see how it's already making an impact in the real world.

Think of an intelligent agent as a super-smart assistant, but digital. It's a piece of software that can make decisions and take action on your behalf. But unlike your average software, it's designed to be more, well, intelligent. It can learn, adapt, and even problem-solve. Pretty cool, huh?

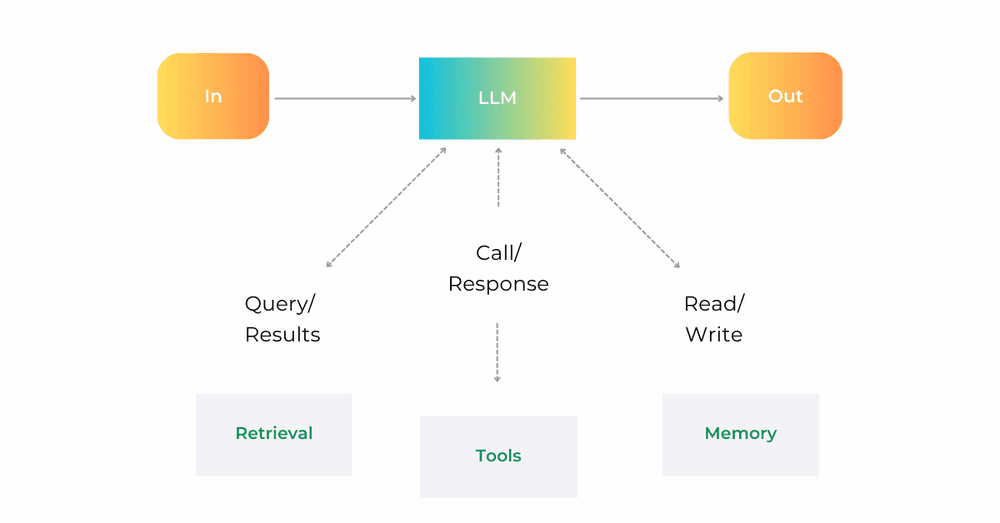

The Building Blocks: The augmented LLM

So, how do we build these intelligent agents? The foundation is something called an augmented LLM (Large Language Model). Think of an LLM as the brain of the operation, a super-advanced system that can understand and generate human-like text. But even the smartest brain needs tools! That's where "augmentation" comes in. We give the LLM extra capabilities like:

Retrieval: The ability to search for information.

Tools: Like giving it a calculator, a calendar, or access to other programs.

Memory: So it can remember past interactions and learn from them.

The cool part? Modern LLMs are getting so good that they can actively choose when and how to use these tools. They can decide when to search for information, which tool is best for the job, and what information to remember.

Think of it like this: You're asking your smart assistant to plan a trip. It wouldn't just randomly spout facts, right? It would use its "retrieval" ability to look up flights and hotels, use a "calendar" tool to check dates, and remember your past travel preferences from its "memory".
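Here's a rough sketch of what an augmented LLM could look like in Python. It's only an illustration, not how any particular product does it: `fake_llm`, the `AugmentedLLM` class, and the keyword-based `retrieve` method are all made up for this post. One more simplification: the caller picks the tool here, whereas a real model would decide that for itself.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for a real model call."""
    return f"[model answer based on {len(prompt)} chars of context]"


@dataclass
class AugmentedLLM:
    llm: Callable[[str], str]
    documents: list[str]                    # what "retrieval" searches
    tools: dict[str, Callable[[str], str]]  # named tools the agent may call
    memory: list[str] = field(default_factory=list)

    def retrieve(self, query: str) -> list[str]:
        # Naive retrieval: keep documents that share a word with the query.
        words = set(query.lower().split())
        return [d for d in self.documents if words & set(d.lower().split())]

    def ask(self, query: str, tool: Optional[str] = None) -> str:
        context = self.retrieve(query)
        # Assumption: the caller names the tool; a real model would choose it.
        tool_result = self.tools[tool](query) if tool else ""
        prompt = "\n".join([*self.memory, *context, tool_result, query])
        answer = self.llm(prompt)
        self.memory.append(f"user: {query}")  # remember the interaction
        return answer


assistant = AugmentedLLM(
    llm=fake_llm,
    documents=["flights to Lisbon in May", "hotel prices in Rome"],
    tools={"calendar": lambda _query: "free: May 3-10"},
)
reply = assistant.ask("Lisbon flights", tool="calendar")
```

After the call, `assistant.memory` holds the question, so the next request starts with that context already in the prompt.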

Making it Work: Different Approaches

Now, there's more than one way to build an intelligent agent. Let's look at five common approaches:

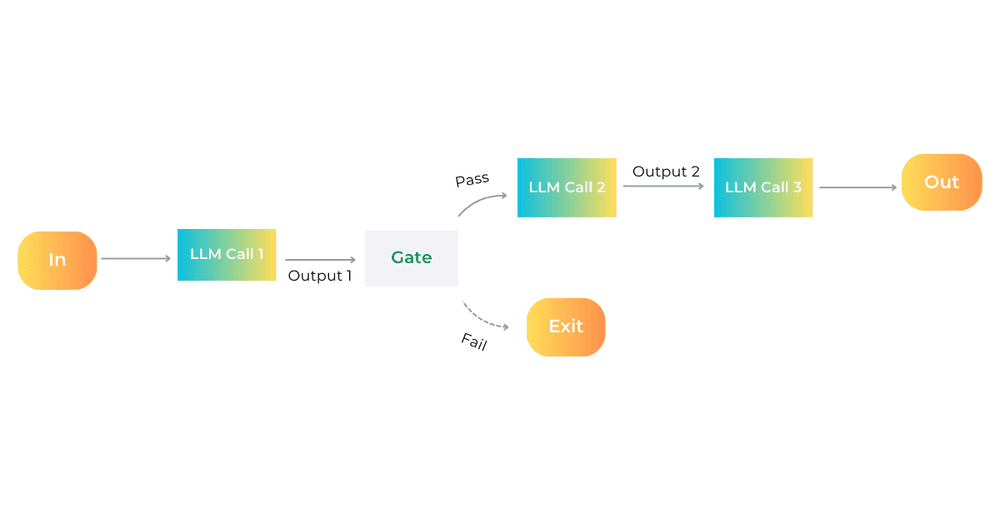

1. Prompt Chaining: Step-by-Step Smartness

Imagine you're baking a cake. You wouldn't just throw all the ingredients in at once, would you? You'd follow the recipe step-by-step. Prompt chaining is similar. We break down a big task into smaller, easier steps for the LLM to handle. Each step builds on the last, and we can even add checks along the way to make sure everything is on track.

When is this useful? When you have a task that can be easily divided into clear steps.

Example:

Let's say you want to write a marketing email and then translate it into Spanish. You'd first have the LLM write the email in English. Then, you'd have it translate that email into Spanish. Two clear steps, one smooth process.

Or let's say you need to create a large document. You can have the LLM generate the outline. Then you check the outline. Then you have the LLM write the document based on that outline.
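The outline-then-write example can be sketched in a few lines of Python. Treat it as a minimal illustration: `stub_llm` is a hypothetical stand-in for a real model call, and `outline_is_valid` is just one possible check you might put between steps.

```python
from typing import Callable


def stub_llm(prompt: str) -> str:
    """Hypothetical stand-in for a real model call."""
    if prompt.startswith("Write an outline"):
        return "1. Intro\n2. Benefits\n3. Call to action"
    if prompt.startswith("Write the document"):
        return "DOCUMENT based on:\n" + prompt.split("\n", 1)[1]
    return ""


def outline_is_valid(outline: str, min_sections: int = 3) -> bool:
    # The check between steps: require a minimum number of non-empty lines.
    sections = [line for line in outline.splitlines() if line.strip()]
    return len(sections) >= min_sections


def chain(topic: str, llm: Callable[[str], str]) -> str:
    # Step 1: generate the outline.
    outline = llm(f"Write an outline for: {topic}")
    # Check: stop early instead of building on a bad outline.
    if not outline_is_valid(outline):
        raise ValueError("outline failed the check; stopping the chain")
    # Step 2: write the document from the approved outline.
    return llm(f"Write the document from this outline:\n{outline}")


document = chain("our new product", stub_llm)
```

The point of the check is that a mistake in step one gets caught before step two spends time (and money) building on it.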

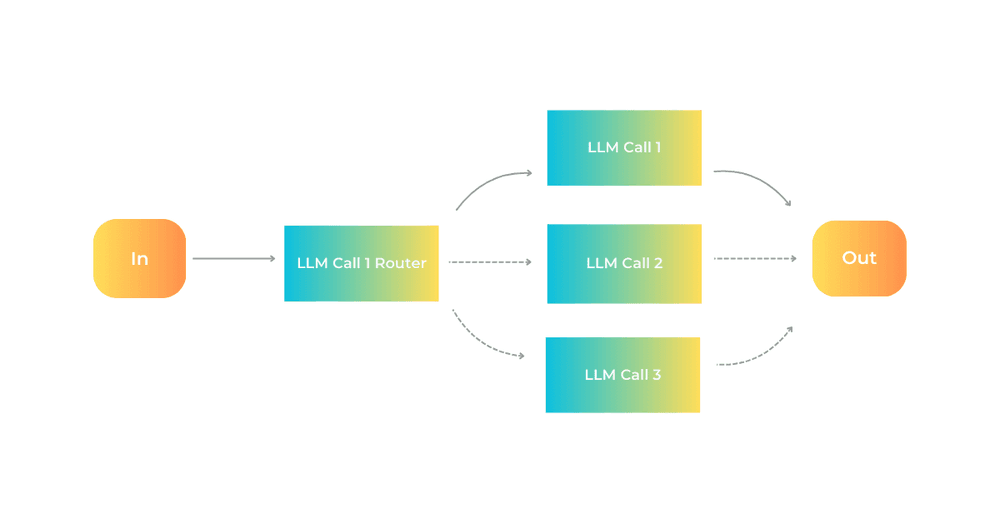

2. Routing: Sending Tasks to the Right Expert

Think of a busy customer service center. When you call, they don't just throw you to any random agent, right? They figure out what your issue is and then route you to the person best equipped to help. Routing in AI does the same thing.

The LLM analyzes the task and then directs it to a specialized tool or process. This is like having a team of experts, each with their own strengths.

When is this useful? When you have complex tasks that can be categorized into different types.

Example:

Imagine a customer service chatbot. It can route general questions to one process, refund requests to another, and technical support issues to a completely different one. Each of these processes can be optimized to handle its specific type of query.

Also, an AI could route easy questions to smaller models, like Claude 3.5 Haiku, and hard questions to bigger models, like Claude 3.5 Sonnet. This way, the AI optimizes for cost and speed.
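A tiny routing sketch in Python might look like this. Everything here is hypothetical: `classify` uses plain keyword matching where a real system would ask an LLM to pick the category, and the three handlers are placeholders for separately tuned processes.

```python
def classify(query: str) -> str:
    """Hypothetical classifier; a real router would ask an LLM instead."""
    text = query.lower()
    if "refund" in text:
        return "refund"
    if any(word in text for word in ("error", "crash", "bug")):
        return "technical"
    return "general"


# Each category gets its own specialised handler (placeholders here).
HANDLERS = {
    "refund": lambda q: f"[refund] starting a refund flow for: {q}",
    "technical": lambda q: f"[technical] opening a support ticket for: {q}",
    "general": lambda q: f"[general] answering from the FAQ: {q}",
}


def route(query: str) -> str:
    # Classify first, then hand the query to the matching handler.
    return HANDLERS[classify(query)](query)


print(route("I want a refund for my order"))
```

The same shape works for routing by difficulty: swap the handlers for calls to a smaller or larger model.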

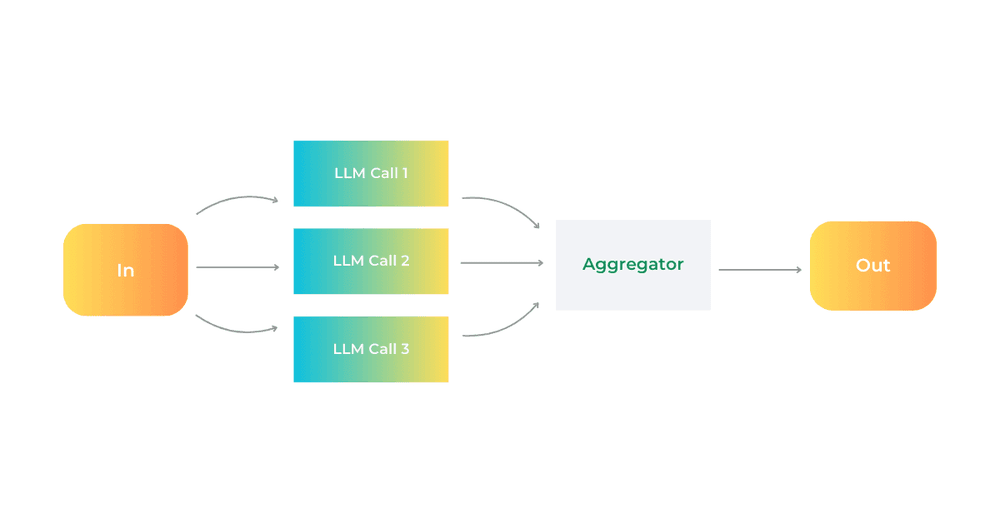

3. Parallelization: Doing Many Things at Once

Imagine you're a master chef juggling multiple dishes in a busy kitchen. You wouldn't just cook one dish at a time, start to finish, right? You'd have different things simmering, baking, and frying all at once! That's the idea behind parallelization. We can have our intelligent agent work on different parts of a task simultaneously, and then combine the results.

There are two main ways to do this:

Splitting (Sectioning): Like dividing a big project among team members. We break the task into smaller, independent chunks that the LLM can work on in parallel.

- Example: Let's say we want to build a safer chatbot. We can have one LLM handle user questions while another LLM simultaneously screens those questions for anything inappropriate or harmful. This tends to work better than having a single LLM call do both jobs, because each call can focus on one thing.

- Example: Imagine you are evaluating how well an LLM is performing. You can have different instances of the LLM grading different aspects of performance simultaneously.

Voting: Like asking a group of friends for their opinion. We have the LLM tackle the same task multiple times, getting slightly different perspectives each time.

- Example: Imagine you need to check if a piece of code has security flaws. You could have multiple LLMs (each using a slightly different approach) analyze the code and flag any potential issues. By combining their "votes," you get a more reliable result.

- Example: You could have multiple LLMs analyze a text to see if it is inappropriate. By asking multiple LLMs, you can fine-tune how sensitive you want the system to be.

When is this useful? When you can speed things up by working on parts of a task in parallel, or when you need multiple perspectives to ensure accuracy.
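Both flavors can be sketched with Python's standard `concurrent.futures` module. This is a toy version under stated assumptions: `answer`, `is_safe`, and the three reviewers are hypothetical stand-ins for separate LLM calls, implemented here as simple string checks so the example runs on its own.

```python
from concurrent.futures import ThreadPoolExecutor


def answer(question: str) -> str:
    """Hypothetical stand-in for the LLM that answers the user."""
    return f"answer to: {question}"


def is_safe(question: str) -> bool:
    """Hypothetical stand-in for the LLM that screens the question."""
    return "password" not in question.lower()


def guarded_answer(question: str) -> str:
    # Splitting: run the answer and the safety screen at the same time.
    with ThreadPoolExecutor(max_workers=2) as pool:
        reply = pool.submit(answer, question)
        safe = pool.submit(is_safe, question)
        return reply.result() if safe.result() else "Sorry, I can't help with that."


# Voting: several reviewers look at the same code, each from a different angle.
REVIEWERS = [
    lambda code: "eval(" in code,
    lambda code: "input(" in code,
    lambda code: "os.system" in code,
]


def vote(code: str, reviewers, threshold: int) -> bool:
    with ThreadPoolExecutor() as pool:
        flags = list(pool.map(lambda check: check(code), reviewers))
    # Flag the code only if enough reviewers agree.
    return sum(flags) >= threshold


flagged = vote("eval(input())", REVIEWERS, threshold=2)
```

The `threshold` argument is the sensitivity dial: lower it to flag more aggressively, raise it to demand more agreement.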

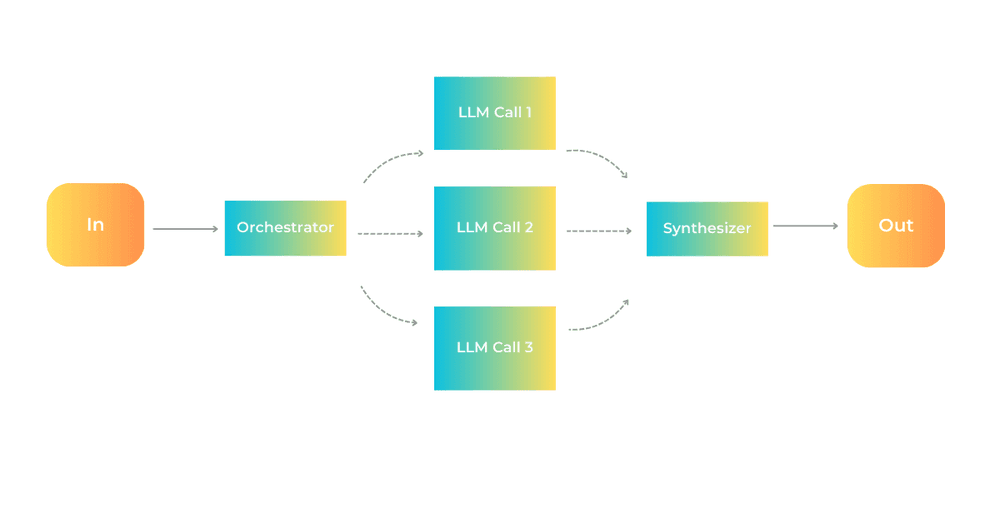

4. Orchestrator-Workers: The AI Project Manager

This is where things get really interesting. Imagine a project manager who can not only delegate tasks but also figure out what tasks need to be done in the first place. That's what an orchestrator-workers setup is like.

We have a central LLM (the orchestrator) that breaks down a complex task, assigns those smaller tasks to other LLMs (the workers), and then puts all the results together.

When is this useful? For really complex tasks where you can't pre-define all the steps.

Example:

Think about complex coding projects. The orchestrator LLM could decide which files need to be changed and what changes are needed, and then assign those changes to worker LLMs.

Or a research project. The orchestrator can have worker LLMs gather information from multiple sources, analyze what comes back, and, based on that analysis, ask the workers for additional information.
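The orchestrator-workers pattern can be sketched like this in Python. It's a simplified illustration: `plan` is a hypothetical stand-in that splits the task text on the word "and", where a real orchestrator would ask an LLM to work out the subtasks, and `worker` stands in for a worker LLM call.

```python
from concurrent.futures import ThreadPoolExecutor


def plan(task: str) -> list[str]:
    """Hypothetical orchestrator step; a real one would ask an LLM."""
    return [part.strip() for part in task.split(" and ") if part.strip()]


def worker(subtask: str) -> str:
    """Hypothetical worker; a real one would be another LLM call."""
    return f"done: {subtask}"


def orchestrate(task: str) -> str:
    # The subtasks are decided at run time, not hard-coded in advance.
    subtasks = plan(task)
    with ThreadPoolExecutor() as pool:
        results = list(pool.map(worker, subtasks))
    # The orchestrator combines the workers' results into one answer.
    return "\n".join(results)


summary = orchestrate("update config.py and fix the tests")
```

The difference from plain parallelization is the `plan` step: the list of subtasks depends on the input instead of being fixed ahead of time.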

5. Evaluator-Optimizer: The AI Feedback Loop

Ever wish you had an editor who could instantly give you feedback on your writing and help you improve it? That's the idea behind the evaluator-optimizer workflow.

One LLM generates something (like a piece of text), and another LLM acts as a critic, providing feedback and suggestions. Then, the first LLM uses that feedback to refine its work. They go back and forth, like a writer and editor polishing a draft.

When is this useful? When you have clear criteria for what makes a good result, and when iterative refinement leads to measurable improvements.

Example:

Translating a poem. The first LLM might translate the words, but the evaluator LLM could point out nuances or cultural references that were missed. The first LLM then refines the translation based on this feedback.

Complex search tasks may require multiple rounds of searching. The evaluator LLM will decide when additional searching is necessary.
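Here's a minimal sketch of that back-and-forth loop in Python. The names are hypothetical: `generate` and `evaluate` stand in for the two LLMs, and the `REQUIRED` checklist is an invented example of "clear criteria". The `max_rounds` limit is an extra assumption so the loop can't run forever.

```python
from typing import Optional

# Invented criteria the evaluator looks for in every draft.
REQUIRED = ("rhyme", "tone")


def generate(task: str, feedback: list[str]) -> str:
    """Hypothetical generator LLM: folds all feedback so far into a new draft."""
    notes = "".join(f" [addresses {item}]" for item in feedback)
    return f"Translation of {task}{notes}"


def evaluate(draft: str) -> Optional[str]:
    """Hypothetical evaluator LLM: returns one issue, or None if satisfied."""
    for item in REQUIRED:
        if item not in draft:
            return item
    return None


def refine(task: str, max_rounds: int = 5) -> str:
    feedback: list[str] = []
    draft = generate(task, feedback)
    for _ in range(max_rounds):
        issue = evaluate(draft)
        if issue is None:
            break  # the evaluator is happy, so stop iterating
        feedback.append(issue)
        draft = generate(task, feedback)
    return draft


final = refine("a poem")
```

With this toy evaluator the loop runs twice, once for each missing item, and then stops.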

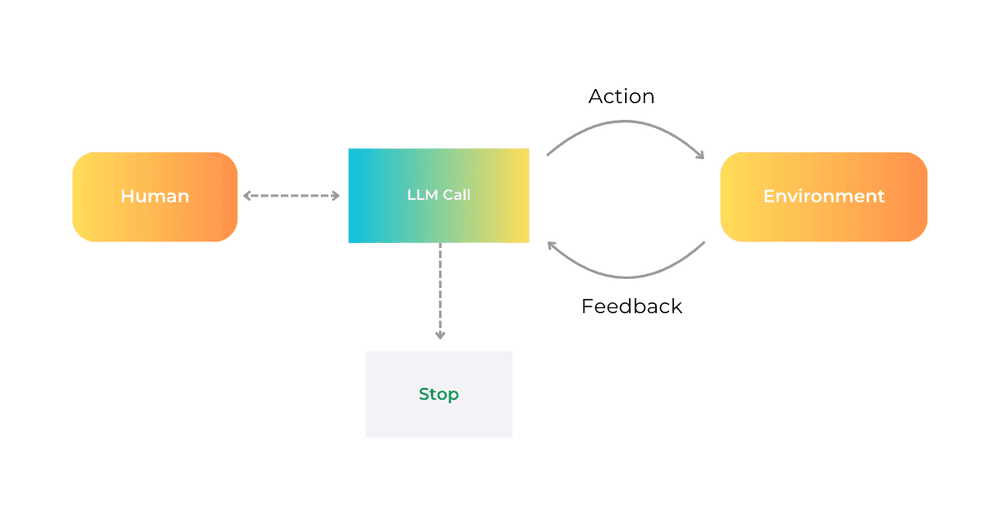

Meet the Autonomous Agents: AI That Gets the Job Done

We've talked about how intelligent agents use tools and different workflows to accomplish tasks. But what if an agent could not just follow instructions but figure out the instructions on its own? That's the power of autonomous agents.

Think of them as the ultimate AI assistants. You give them a goal, and they take care of the rest, planning and executing tasks with minimal human intervention. They can even ask you for clarification if needed! They are like super-efficient digital workers who can figure things out and get stuff done.

How Do They Work Their Magic?

Autonomous agents are built on the foundation of powerful LLMs. These LLMs have gotten really good at a few key things:

- Understanding Complex Instructions: They can grasp the nuances of human language, even when we're not super specific.

- Reasoning and Planning: They can think through a problem, break it down into steps, and create a plan of action.

- Using Tools Effectively: They know when and how to use the tools at their disposal (like searching the web, using software, accessing databases, etc).

- Recovering from Errors: If something goes wrong, they can adjust their approach and try again.

It's important to note: These agents need to constantly check in with the "real world" (we call it "ground truth") to make sure they're on the right track. For example, they need to see the results of a tool they used or confirm that a line of code they wrote works as intended.
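To make the "ground truth" idea concrete, here's a small Python sketch of an agent loop that checks its own code by actually running it. It's only an illustration: `propose` is a hypothetical stand-in for the model (it deliberately writes a bug on the first try), and the `max_steps` limit is an assumed safeguard, not something prescribed by any framework.

```python
def run_tests(code: str) -> tuple[bool, str]:
    """Ground truth: execute the candidate code and check a real result."""
    namespace: dict = {}
    try:
        exec(code, namespace)
        ok = namespace["add"](2, 3) == 5
        return ok, "ok" if ok else "add(2, 3) returned the wrong value"
    except Exception as exc:
        return False, f"{type(exc).__name__}: {exc}"


def propose(attempt: int, last_error: str) -> str:
    """Hypothetical model: buggy on the first attempt, fixed afterwards."""
    if attempt == 0:
        return "def add(a, b):\n    return a - b"
    return "def add(a, b):\n    return a + b"


def agent_loop(max_steps: int = 5) -> tuple[str, int]:
    error = ""
    for attempt in range(max_steps):
        code = propose(attempt, error)   # act
        passed, error = run_tests(code)  # observe the real result
        if passed:
            return code, attempt + 1
    # Safeguard: give up instead of looping (and spending) forever.
    raise RuntimeError(f"no working solution after {max_steps} steps: {error}")


solution, steps_used = agent_loop()
```

The agent never assumes its code works. Each attempt is judged by what happened when the code ran, and the error message feeds into the next attempt.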

Are They Useful?

Autonomous agents shine when dealing with open-ended problems where you can't predict every single step in advance. They're also great when you need an AI to handle multiple turns in a conversation or task and you can trust its decision making.

Examples in Action:

- A Coding Agent: This agent can tackle complex coding tasks, even those that involve modifying multiple files based on a simple description. This is like having a super-efficient coding buddy who can handle the heavy lifting! Anthropic reports building an agent like this to resolve SWE-bench tasks.

- "Using a Computer" Agent: This is Anthropic's "computer use" reference implementation, in which Claude (Anthropic's AI) operates a computer to complete tasks, much as a person would.

Important Considerations:

Because these agents are so autonomous, they can be more expensive to run, and there's a higher chance of errors piling up if something goes wrong early on. That's why thorough testing and safeguards are essential. It's like giving someone a lot of responsibility – you need to make sure they're well-prepared!

The Future is Agentic!

While advanced workflows like parallelization and orchestrator-workers are becoming increasingly common in software today, fully autonomous agents are still relatively new. Many experts believe that 2025 could be a breakout year for these agents as the technology matures and becomes more reliable. Together, these workflows and autonomous agents are what we call "agentic systems".

The Takeaway: Keep it Simple, Iterate, and Measure

The key to success with AI isn't about building the most complicated system. It's about building the right system for your needs. Start simple, test thoroughly, and only add complexity when it truly improves results.

When building these agents, remember these principles:

- Simplicity: Keep the design as straightforward as possible.

- Transparency: Make it clear how the agent is making decisions.

- Careful Design of the Agent-Computer Interface (ACI): Make sure the agent can interact with the computer and its tools effectively.

The Bottom Line

Intelligent agents, especially autonomous ones, are poised to revolutionize the way we work and interact with technology. By understanding the principles behind them and approaching their development thoughtfully, we can unlock their incredible potential and create a future where AI truly empowers us to achieve more.

What are your thoughts on autonomous agents? Are you ready for a future where AI can handle complex tasks with greater independence? Share your thoughts in the comments!

Reference: Anthropic