GenAI?

What is GenAI?

Generative AI, or GenAI, is a fascinating branch of artificial intelligence. It’s the technology behind those chatbots you’ve probably interacted with online, the AI art generators that have taken social media by storm, and even some of the tools you use at work. At its core, GenAI is all about creating: generating text, images, music, you name it. In simple terms, you can think of GenAI as technology that learns patterns from existing content and uses them to produce new content, loosely inspired by how the human brain learns.

How does GenAI work?

Imagine you're trying to predict the next word in a sentence. If the previous words were "1, 2, 3, 4, 5", you'd probably guess "6", right? That's because your brain has learned the pattern. GenAI works in a similar way, but on a much larger scale. It learns patterns from massive amounts of data and uses those patterns to generate new content.
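To make the idea concrete, here is a minimal sketch of next-word prediction by counting. It is a toy, not how real GenAI models are built: real models use neural networks and look at far more context than one previous word. The function names and the training text are made up for illustration.

```python
from collections import Counter, defaultdict

def train_bigram_model(tokens):
    """Count how often each token is followed by each other token."""
    follow_counts = defaultdict(Counter)
    for current, following in zip(tokens, tokens[1:]):
        follow_counts[current][following] += 1
    return follow_counts

def predict_next(follow_counts, token):
    """Return the most frequent follower of `token`, or None if unseen."""
    followers = follow_counts.get(token)
    if not followers:
        return None
    return followers.most_common(1)[0][0]

# Hypothetical toy "training data": two counting sequences.
training_text = "1 2 3 4 5 6 7 8 9 10 1 2 3 4 5 6"
model = train_bigram_model(training_text.split())

prediction = predict_next(model, "5")
print(prediction)
```

The model has only ever seen "5" followed by "6", so that is its guess. A token it has never seen gets no prediction at all, which hints at why real models need so much training data.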

LLMs

The Power of Large Language Models (LLMs)

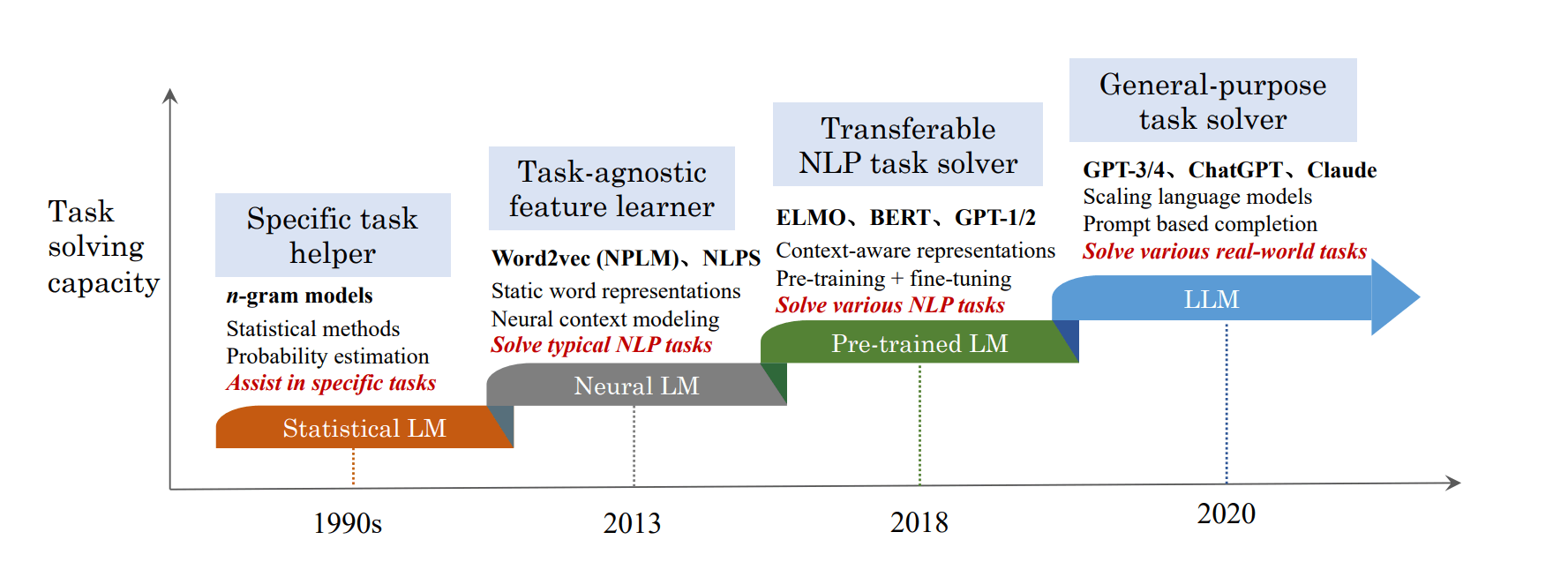

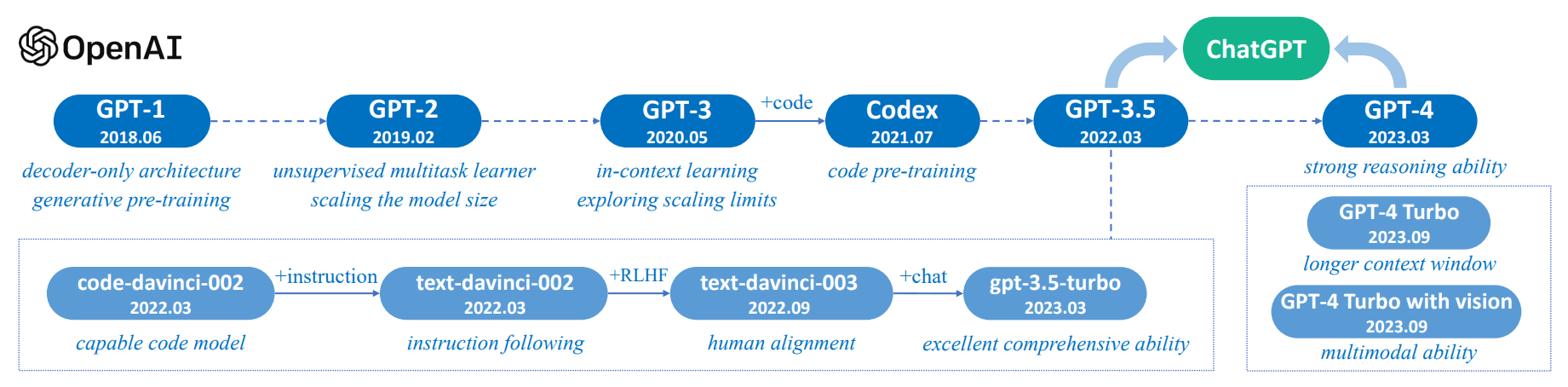

To do that, GenAI relies on something called a Large Language Model (LLM). Some of the most well-known LLMs include OpenAI's GPT-4, Google's LaMDA, and Meta's LLaMA.

The goal of an LLM is to interpret the prompts that users enter and then generate a coherent, contextual response that meets the user's needs.

These models have been fed a tremendous amount of information, allowing them to understand and respond to a wide range of prompts in a way that seems remarkably human.

Architecture of the LLM

The architecture of LLMs is based on a special type of neural network called a Transformer. Think of a Transformer as a super-smart language whiz. It has two key superpowers:

With these superpowers, Transformers can process and understand not just single sentences, but entire paragraphs, articles, or even books. They can grasp the meaning of words in context, figure out how different parts of a text relate to each other, and even generate creative new text that sounds like it was written by a human. This is why LLMs are so powerful and versatile. They can be used for a wide range of tasks, from translation and summarization to question answering and creative writing.
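One well-known mechanism Transformers use to work out how different parts of a text relate to each other is called self-attention. The sketch below is a heavily simplified version, written under a loud assumption: the queries, keys, and values are just the input vectors themselves, whereas real Transformers first pass them through learned projection matrices. The function names and example vectors are hypothetical.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))

def self_attention(vectors):
    """Simplified self-attention: each output is a weighted mix of all inputs.

    Assumption: no learned projections; inputs act as queries, keys, and values.
    """
    scale = math.sqrt(len(vectors[0]))
    outputs = []
    for query in vectors:
        # Score this token against every token, then normalize the scores.
        weights = softmax([dot(query, key) / scale for key in vectors])
        mixed = [
            sum(w * value[i] for w, value in zip(weights, vectors))
            for i in range(len(query))
        ]
        outputs.append(mixed)
    return outputs

# Hypothetical 2-dimensional vectors for three tokens.
token_vectors = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0]]
attended = self_attention(token_vectors)
```

Each output vector blends information from every token, with similar tokens contributing more. That blending is what lets the model read a word in the light of the words around it.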

How are LLMs trained?

The process of training a model can be divided into two stages: pre-training and fine-tuning. After going through both stages, the LLM becomes something like a know-it-all professor with top-notch language skills.

Pre-training: Pre-training is like teaching a language model the basics of language. It's exposed to massive amounts of text data, like books, articles, and websites. This helps it learn grammar, vocabulary, and how words relate to each other.

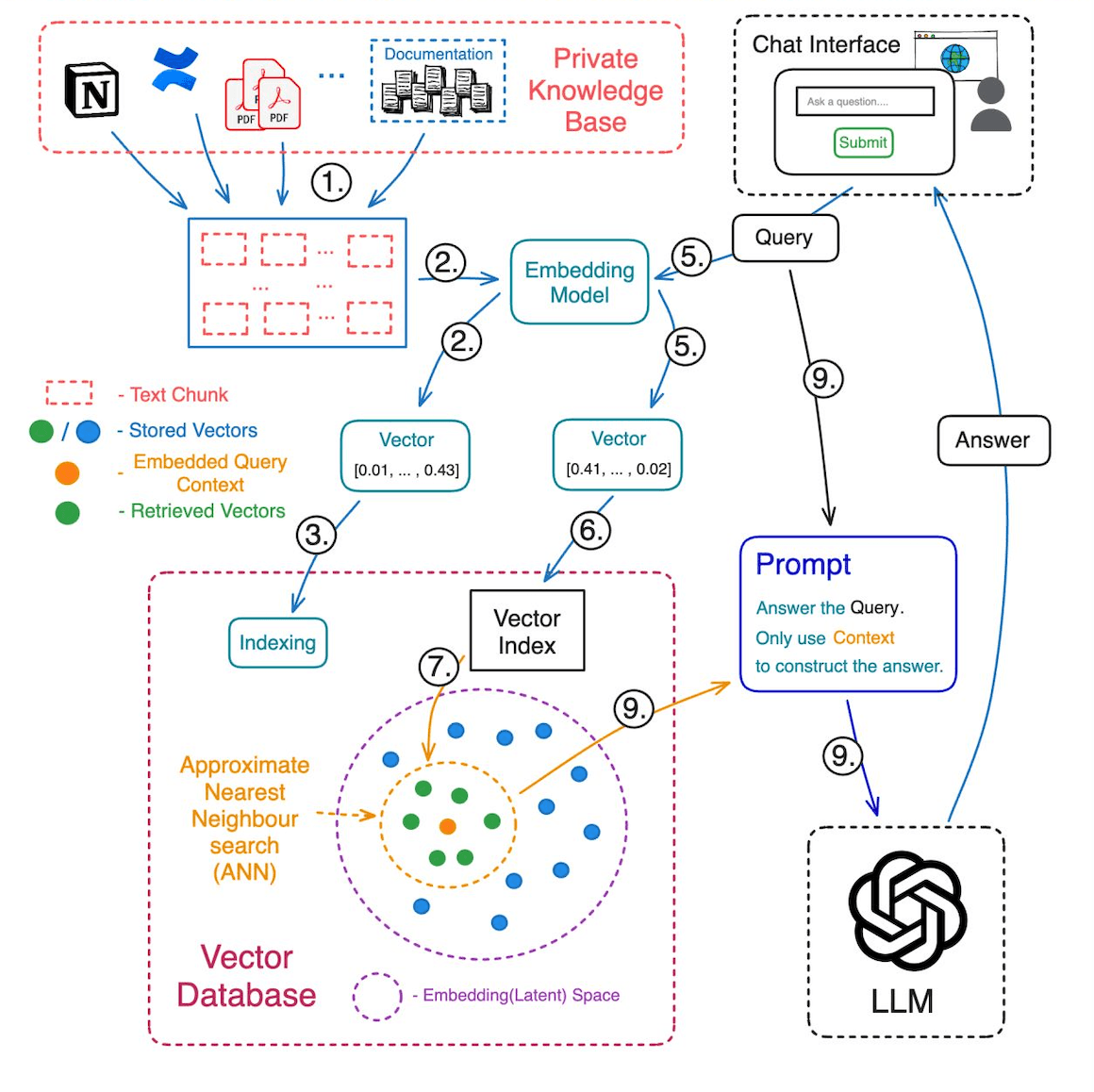

The first step is to break down the entire sentence into smaller "pieces" called tokens. Each token can be a word, part of a word, or a special character (like an exclamation point or question mark). After this breakdown, the LLM maps each token to a vector (a list of numbers). This process is called embedding or encoding. Inside the model, these vectors live in an embedding table (think of it like a warehouse); applications built around LLMs often keep similar vectors in a vector database.
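Here is a rough sketch of those two steps, tokenizing and then embedding. Everything in it is a simplifying assumption: the splitting rule is a toy (real tokenizers usually learn sub-word pieces from data), and the `embed` function is a hypothetical stand-in that produces arbitrary numbers, whereas a real model learns its vectors during training.

```python
import re

def tokenize(text):
    """Split text into word tokens and punctuation tokens (toy rule)."""
    return re.findall(r"\w+|[^\w\s]", text.lower())

def build_vocabulary(tokens):
    """Assign each distinct token an integer id in first-seen order."""
    vocabulary = {}
    for token in tokens:
        if token not in vocabulary:
            vocabulary[token] = len(vocabulary)
    return vocabulary

def embed(token_id, dimensions=4):
    """Hypothetical stand-in for a learned embedding: arbitrary but repeatable."""
    return [((token_id + 1) * (i + 3) % 7) / 7 for i in range(dimensions)]

tokens = tokenize("Dogs are great!")
vocabulary = build_vocabulary(tokens)

# The "warehouse": one vector per token in the vocabulary.
embedding_table = {
    token: embed(token_id) for token, token_id in vocabulary.items()
}

for token in tokens:
    print(token, embedding_table[token])
```

Note that the exclamation point becomes its own token, just like a word does.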

Why encoding? It's necessary to translate human language into the language of machines so they can understand it.

Since each token is represented by numbers within a vector, mathematical operations can be used to measure the "closeness" of vectors. This is how LLMs capture the meaning of tokens. Tokens with similar meanings or related topics are "arranged" close to each other in the vector space.

For example, the word "dog" might be embedded as [48, 49, 51, 15, 91, 36, 63, 10.1,...], and the word "puppy" might be embedded as [48, 49, 51, 15, 91, 36, 63, 10.2,...]. You can see that the first parts of these two vectors are the same, so the LLM will place them close together and treat the contexts in which the two words are used as related. These numbers are only illustrative; the real values and the calculations behind them are too specialized to cover here.
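One common way to measure that "closeness" is cosine similarity, which is near 1 when two vectors point in almost the same direction. The sketch below uses only the eight numbers shown above for "dog" and "puppy" (the rest of each vector is not given), and adds a made-up vector for "car" purely for contrast. Whether a particular LLM uses this exact measure is an assumption.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot_product = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot_product / (norm_a * norm_b)

# First eight components from the example above.
dog = [48, 49, 51, 15, 91, 36, 63, 10.1]
puppy = [48, 49, 51, 15, 91, 36, 63, 10.2]
# Hypothetical vector for an unrelated word, invented for contrast.
car = [5, 90, 2, 77, 8, 60, 1, 44]

dog_puppy = cosine_similarity(dog, puppy)
dog_car = cosine_similarity(dog, car)

print(f"dog vs puppy: {dog_puppy:.4f}")
print(f"dog vs car:   {dog_car:.4f}")
```

The "dog" and "puppy" score comes out very close to 1, while the "dog" and "car" score is much lower, which matches the intuition that related words sit near each other.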

Fine-tuning: Fine-tuning is like sending a language model to college to get a degree in a specific field. After learning the basics of language during pre-training, the model is now trained on a smaller, more focused dataset related to a particular task. This helps the model specialize and become an expert in that area.

For example, imagine that pre-training is the LLM finishing grade 12, and fine-tuning is the LLM going to university to study a specialized subject. If we wanted the model to become a medical chatbot, we would fine-tune it on medical textbooks, research papers, and patient records. This would teach the model the specific language and terminology used in the medical field, allowing it to provide accurate and relevant information to patients and healthcare professionals.
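The two stages can be imitated with a toy word-counting model. This is only an analogy under stated assumptions: real fine-tuning adjusts the weights of a neural network, not word counts, and the `weight` parameter and both sample texts are hypothetical.

```python
from collections import Counter, defaultdict

def update_counts(model, text, weight=1):
    """Add next-word counts from `text` into `model` (a toy 'training' step)."""
    words = text.lower().split()
    for current, following in zip(words, words[1:]):
        model[current][following] += weight
    return model

def predict_next(model, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    followers = model.get(word)
    return followers.most_common(1)[0][0] if followers else None

# Hypothetical general-purpose and medical texts.
general_text = "the patient reader enjoyed the book and the patient reader smiled"
medical_text = "the patient received treatment and the patient received care"

model = defaultdict(Counter)

# "Pre-training": learn from general text.
update_counts(model, general_text)
before = predict_next(model, "patient")

# "Fine-tuning": keep what was learned, then emphasize domain text.
update_counts(model, medical_text, weight=3)
after = predict_next(model, "patient")

print(before, "->", after)
```

Before the second step the model treats "patient" as an adjective and continues with "reader". After it, the medical sense wins and the model continues with "received".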

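=> In short: pre-training gives the model a broad grasp of language, and fine-tuning turns that general knowledge into expertise for a specific task.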

What happens when you chat with an LLM chatbot?

Let's say you're using a chatbot to summarize a research paper. Here's what happens behind the scenes:

The Importance of Context Windows

Have you ever had a conversation with someone who forgets what you said a few sentences ago? It's frustrating, right? LLMs can have a similar issue. The amount of text they can remember and process at once is called their context window. A larger context window means the model can hold more information in mind, leading to more coherent and relevant responses.

Why it matters:

But there's a catch: bigger windows need more computing power, which can make things slower and more expensive.

Example: You're chatting with an AI chatbot. You ask it to summarize a long article. With a small context window, the AI might only understand parts of the article and give you a confusing summary. But with a larger context window, the AI can read the whole article and give you a clear, accurate summary.
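A small sketch shows both sides of that trade-off. It assumes the simplest possible strategy, keeping only the most recent tokens, and uses whitespace splitting as a stand-in for real tokenization. Actual chatbots may handle overflow differently. The cost function reflects the fact that standard self-attention compares every token with every other token; the function names are hypothetical.

```python
def fit_to_context_window(tokens, window_size):
    """Keep only the most recent `window_size` tokens; older ones are dropped."""
    if window_size <= 0:
        return []
    return tokens[-window_size:]

def pairwise_comparisons(window_size):
    """Rough cost proxy: every token in the window is compared with every token."""
    return window_size * window_size

# Hypothetical one-sentence "article".
article = "the study found that sleep improves memory in adults".split()

small_view = fit_to_context_window(article, 4)
large_view = fit_to_context_window(article, 50)

print(small_view)
print(large_view)
print(pairwise_comparisons(4), pairwise_comparisons(8))
```

With a window of 4, the model never sees that the sentence is about a study or about sleep. With a window of 50, it sees everything. Doubling the window, though, roughly quadruples the comparison count in this simple cost model.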

The Limitations of LLMs

While LLMs are incredibly powerful, they're not perfect. They can sometimes be verbose, providing more information than necessary. They can also struggle with ambiguous prompts and may produce inconsistent results. Perhaps most importantly, LLMs can exhibit biases present in their training data, leading to potentially harmful or discriminatory outputs. It's crucial to be aware of these limitations and use LLMs responsibly.

Stay tuned for more!

This article is just the first step in our journey to explore the vast world of GenAI. Through the basic concepts, operations, and limitations of LLMs, we hope you've gained a more comprehensive overview of this promising technology.

In the upcoming articles, we will delve deeper into Prompt Engineering - the art of controlling LLMs for maximum effectiveness. From basic to complex queries, from batch task processing to data analysis, everything will be explored in detail. And don't forget, we will also learn about building chatbots, training data, and many other practical applications of AI and AI Agents.

Are you ready to step into the new era of AI?

Diaflow is here to accompany you on the journey of discovering and applying AI to your work and life. With a combination of cutting-edge technology and a team of experienced experts, we provide comprehensive AI solutions that help businesses optimize processes, enhance productivity, and create exceptional customer experiences.

Don't miss the opportunity to experience the power of AI. Contact Diaflow today to learn more about our groundbreaking AI solutions and how we can help your business achieve remarkable growth.